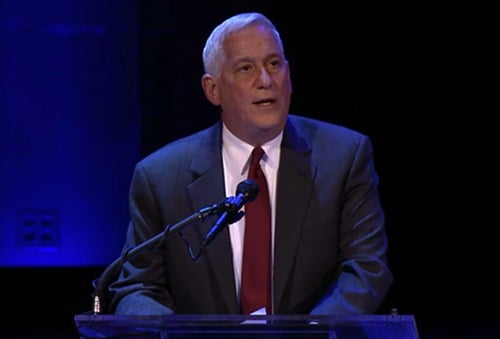

Aspen Institute President and CEO Walter Isaacson recently delivered the 2014 Jefferson Lecture. The lecture, established by the National Endowment for the Humanities in 1972, is the highest honor the federal government grants for exceptional achievement in the humanities. Below, read the full text from the May 12th lecture at the John F. Kennedy Center for the Performing Arts in Washington, DC. Watch the lecture on the NEH website.

“My fellow humanists,

I am deeply humbled to be here today. I know this is a standard statement to make at moments such as these, but in my case it has the added virtue of being true. There is no one on the list of Jefferson lecturers, beginning with Lionel Trilling, who is not an intellectual and artistic hero of mine, and I cannot fathom why I am part of this procession. But that makes me feel all the more humbled, so I thank you.

It is particularly meaningful for me to be giving this lecture on the 25th anniversary of the one by Walker Percy. I took the train from New York for that occasion, looking out of the window and thinking of his eerie essay about the malaise, “The Man on the Train.” If memory serves, it was over at the Mellon Auditorium, and Lynne Cheney did the introduction.

Dr. Percy, with his wry philosophical depth and lightly-worn grace, was a hero of mine. He lived on the Bogue Falaya, a bayou-like, lazy river across Lake Pontchartrain from my hometown of New Orleans. My friend Thomas was his nephew, and thus he became “Uncle Walker” to all of us kids who used to go up there to fish, capture sunning turtles, water ski, and flirt with his daughter Ann. It was not quite clear what Uncle Walker did. He had trained as a doctor, but he never practiced. Instead, he seemed to be at home most days, sipping bourbon and eating hog’s head cheese. Ann said he was a writer, but it was not until his first novel, The Moviegoer, had gained recognition that it dawned on me that writing was something you could do for a living, just like being a doctor or a fisherman or an engineer. Or a humanist.

He was a kindly gentleman, whose placid face seemed to know despair but whose eyes nevertheless often smiled. He once said: “My ideal is Thomas More, an English Catholic who wore his faith with grace, merriment, and a certain wryness.”[i] That describes Dr. Percy well.

His speech twenty-five years ago was, appropriately enough for an audience of humanists, about the limits of science. “Modern science is itself radically incoherent, not when it seeks to understand subhuman organisms and the cosmos, but when it seeks to understand man,” he said. I thought he was being a bit preachy. But then he segued into his dry, self-deprecating humor. “Surely there is nothing wrong with a humanist, even a novelist, who is getting paid by the National Endowment for the Humanities, taking a look at his colleagues across the fence, scientists getting paid by the National Science Foundation, and saying to them in the friendliest way, ‘Look, fellows, it’s none of my business, but hasn’t something gone awry over there that you might want to fix?’” He said he wasn’t pretending to have a grand insight like “the small boy noticing the naked Emperor.” Instead, he said, “It is more like whispering to a friend at a party that he’d do well to fix his fly.”[ii]

The limits of science was a subject he knew well. He had trained as a doctor and was preparing to be a psychiatrist. After contracting tuberculosis, he woke up one morning and had an epiphany. He realized science couldn’t teach us anything worth knowing about the human mind, its yearnings, depressions, and leaps of faith.

So he became a storyteller. Man is a storytelling animal, especially southerners. Alex Haley once said to someone, who was stymied about how to give a lecture such as this, that there were six magic words to use: “Let me tell you a story.” So let me tell you a story: Percy’s novels, I eventually noticed, carried philosophical, indeed religious, messages. But when I tried to get him to expound upon them, he demurred. There are, he told me, two types of people who come out of Louisiana: preachers and storytellers. For goodness sake, he said, be a storyteller. The world has too many preachers.

For Dr. Percy, storytelling was the humanist’s way of making sense out of data. Science gives us empirical facts and theories to tie them together. Humans turn them into narratives with moral and emotional and spiritual meaning.

His specialty was the “diagnostic novel,” which played off of his scientific knowledge to diagnose the modern condition. In Love in the Ruins, Dr. Thomas More, a fictional descendant of the English saint, is a psychiatrist in a Louisiana town named Paradise who invents what he calls an “Ontological Lapsometer,” which can diagnose and treat our malaise.

I realized that Walker Percy’s storytelling came not just from his humanism – and certainly not from his rejection of science. Its power came because he stood at the intersection of the humanities and the sciences. He was our interface between the two.

That’s what I want to talk about today. The creativity that comes when the humanities and science interact is something that has fascinated me my whole life.

When I first started working on a biography of Steve Jobs, he told me: “I always thought of myself as a humanities person as a kid, but I liked electronics. Then I read something that one of my heroes, Edwin Land of Polaroid, said about the importance of people who could stand at the intersection of humanities and sciences, and I decided that’s what I wanted to do.”[iii]

In his product demos, Jobs would conclude with a slide, projected on the big screen behind him, of a street sign showing the intersection of the Liberal Arts and the Sciences. At his last major product launch, the iPad 2, in 2011, he ended again with those street signs and said: “It’s in Apple’s DNA that technology alone is not enough — it’s technology married with liberal arts, married with the humanities, that yields us the result that makes our heart sing.” That’s what made him the most creative technology innovator of our era, and that’s what he infused into the DNA of Apple, which is still evident today.

It used to be common for creative people to stand at this intersection. Leonardo da Vinci was the exemplar, and his famous drawing of the Vitruvian Man became the symbol of the connection between the humanities and the sciences. “Leonardo was both artist and scientist, because in his day there was no distinction,” writes science historian Arthur I. Miller in his forthcoming book, Colliding Worlds.

Two of my biography subjects embody that combination. Benjamin Franklin was America’s founding humanist, but he was also the most important experimental scientist of his era. And his creativity came from connecting the two realms.

We sometimes think of him as a doddering dude flying a kite in the rain. But his electricity experiments established the single-fluid theory of electricity, the most important scientific breakthrough of his era. As Harvard professor Dudley Herschbach declared: “His work on electricity ushered in a scientific revolution comparable to those wrought by Newton in the previous century or by Watson and Crick in ours.”

Part of his talent as both a scientist and humanist was his facility as a clear writer, and he crafted the words we still use for electrical flow: positive and negative charges, battery, condenser, conductor.

Because he was a humanist, he looked for ways that his science could benefit society. He lamented to a friend that he was “chagrined” that the electricity experiments “have hitherto been able to discover nothing in the way of use to mankind.” He actually did come up with one use early on. He was able to apply what they learned to prepare the fall feast. He wrote, “A turkey is to be killed for our dinners by the electrical shock; and roasted by the electrical jack, before a fire kindled by the electrified bottle.” Afterwards he reported, “The birds killed in this manner eat uncommonly tender.”[iv] I think that I can speak for Dr. Percy and say that we Southerners ought to honor him as the inventor of the first fried turkey.

Of course his electricity experiments eventually led him to the most useful invention of his age: the lightning rod. Having noticed the similarity of electrical sparks and lightning bolts, he wrote in his journal the great rallying cry of the scientific method: “Let the experiments be made.”[v] And they were. He became a modern Prometheus, stealing fire from the gods. Few scientific discoveries have been of such immediate service to mankind.

Franklin’s friend and protégé, and our lecture’s patron, Thomas Jefferson, also combined a love of science with that of the humanities. The week that he became Vice President in 1797, Jefferson presented a formal research paper on fossils to the American Philosophical Society, the scientific group founded a half century earlier by young Benjamin Franklin. Jefferson became president of the organization and held that post even as he served as President of the United States.

My point is not merely that Franklin and Jefferson loved the sciences as well as the arts. It’s that they stood at the intersection of the two. They were exemplars of an Enlightenment in which natural order and Newtonian mechanical balances were the foundation for governance.

Take for example the crafting of what may be the greatest sentence ever written, the second sentence of the Declaration of Independence.

The Continental Congress had created a committee to write that document. It may have been the last time Congress created a great committee. It included Benjamin Franklin, Thomas Jefferson, and John Adams.

When he had finished a rough draft, Jefferson sent it to Franklin in late June 1776. “Will Doctor Franklin be so good as to peruse it,” he wrote in his cover note, “and suggest such alterations as his more enlarged view of the subject will dictate?”[vi] People were more polite to editors back then than they were in my day.

Franklin made only a few changes, some of which can be viewed on what Jefferson referred to as the “rough draft” of the Declaration. (This remarkable document is at the Library of Congress.) The most important of his edits was small but resounding. Jefferson had written, “We hold these truths to be sacred and undeniable…” Franklin crossed out, using the heavy backslashes that he often employed, the last three words of Jefferson’s phrase and changed it to the words now enshrined in history: “We hold these truths to be self-evident.”

The idea of “self-evident” truths came from the rationalism of Isaac Newton and Franklin’s close friend David Hume. The sentence went on to say that “all men are created equal” and “from that equal creation they derive rights.” The committee changed it to “they are endowed by their creator with certain inalienable rights.”[vii]

So here in the editing of a half of one sentence we see them balancing the role of divine providence in giving us our rights with the role of rationality and reason. The phrase became a wonderful blending of the sciences and humanities.

The other great person I wrote about who stood at the intersection of the sciences and humanities came at it from the other direction: Albert Einstein.

I have some good news for parents in this room. Einstein was no Einstein when he was a kid.

He was slow in learning how to talk. “My parents were so worried,” he later recalled, “that they consulted a doctor.” The family maid dubbed him “der Depperte,” the dopey one.[viii]

His slow development was combined with a cheeky rebelliousness toward authority, which led one schoolmaster to send him packing and another to amuse history by declaring that he would never amount to much. These traits made Albert Einstein the patron saint of distracted school kids everywhere. But they also helped to make him, or so he later surmised, the most creative scientific genius of modern times.

His cocky contempt for authority led him to question received wisdom in ways that well-trained acolytes in the academy never contemplated. And as for his slow verbal development, he thought that it allowed him to observe with wonder the everyday phenomena that others took for granted. “When I ask myself how it happened that I in particular discovered relativity theory, it seemed to lie in the following circumstance,” Einstein once explained. “The ordinary adult never bothers his head about the problems of space and time. These are things he has thought of as a child. But I developed so slowly that I began to wonder about space and time only when I was already grown up. Consequently, I probed more deeply into the problem than an ordinary child would have.”

His success came from his imagination, rebellious spirit, and his willingness to question authority. These are things the humanities teach.

He marveled at even nature’s most mundane amazements. One day, when he was sick as a child, his father gave him a compass. As he moved it around, the needle would twitch and point north, even though nothing physical was touching it. He was so excited that he trembled and grew cold. You and I remember getting a compass when we were kids. “Oh, look, the needle points north,” we would exclaim, and then we’d move on – “Oh, look, a dead squirrel” – to something else. But throughout his life, and even on his deathbed as he scribbled equations seeking a unified field theory, Einstein marveled at how an electromagnetic field interacted with particles and related to gravity. In other words, why that needle twitched and pointed north.

His mother, an accomplished pianist, also gave him a gift at around the same time, one that likewise would have an influence throughout his life. She arranged for him to take violin lessons. After being exposed to Mozart’s sonatas, music became both magical and emotional to him.

Soon he was playing Mozart duets with his mother accompanying him on the piano. “Mozart’s music is so pure and beautiful that I see it as a reflection of the inner beauty of the universe itself,” he later told a friend.[ix] “Of course,” he added in a remark that reflected his view of math and physics as well as of Mozart, “like all great beauty, his music was pure simplicity.”[x]

Music was no mere diversion. On the contrary, it helped him think. “Whenever he felt that he had come to the end of the road or faced a difficult challenge in his work,” said his son, “he would take refuge in music and that would solve all his difficulties.”[xi] The violin thus proved useful during the years he lived alone in Berlin wrestling with general relativity. “He would often play his violin in his kitchen late at night, improvising melodies while he pondered complicated problems,” a friend recalled. “Then, suddenly, in the middle of playing, he would announce excitedly, ‘I’ve got it!’ As if by inspiration, the answer to the problem would have come to him in the midst of music.”[xii]

He had an artist’s visual imagination. He could visualize how equations were reflected in realities. As he once declared, “Imagination is more important than knowledge.”[xiii]

He also had a spiritual sense of the wonders that lay beyond science. When a young girl wrote to ask if he was religious, Einstein replied: “Everyone who is seriously involved in the pursuit of science becomes convinced that a spirit is manifest in the laws of the Universe – a spirit vastly superior to that of man, and one in the face of which we with our modest powers must feel humble.”[xiv]

At age 16, still puzzling over why that compass needle twitched and pointed north, he was studying James Clerk Maxwell’s equations describing electromagnetic fields. If you look at Maxwell’s equations, or if you’re Einstein and you look at Maxwell’s equations, you notice that they decree that an electromagnetic wave, such as a light wave, always travels at the same speed relative to you, no matter if you’re moving really fast toward the source of the light or away from it. Einstein did a thought experiment. Imagine, he wrote, “a person could run after a light wave with the same speed as light.”[xv] Wouldn’t the wave seem stationary relative to this observer? But Maxwell’s equations didn’t allow for that. The disjuncture caused him such anxiety, he recalled, that his palms would sweat. I remember what was causing my palms to sweat at age 16 when I was growing up in New Orleans, and it wasn’t Maxwell’s equations. But that’s why he’s Einstein and I’m not.

He was not an academic superstar. In fact, he was rejected by the second best college in Zurich, the Zurich Polytech. I always wanted to track down the admissions director who rejected Albert Einstein. He finally got in, but when he graduated he couldn’t get a post as a teaching assistant or even as a high school teacher. He finally got a job as a third class examiner in the Swiss patent office.

Among the patent applications Einstein found himself examining were those for devices that synchronized clocks. Switzerland had just gone on standard time zones, and the Swiss, being rather Swiss, deeply cared that when it struck seven in Bern it would strike seven at that exact same instant in Basel or Geneva. The only way to synchronize distant clocks is to send a signal between them, and such a signal, such as a light or radio signal, travels at the speed of light. And you had this patent examiner who was still thinking, What if I caught up with a light beam and rode alongside it?

His imaginative leap — a thought experiment done at his desk in the patent office — was that someone travelling really fast toward one of the clocks would see the timing of the signal’s arrival slightly differently from someone travelling really fast in the other direction. Clocks that looked synchronized to one of them would not look synchronized to the other. From that he made an imaginative leap. The speed of light is always constant, he said. But time is relative, depending on your state of motion.

Now if you don’t fully get it, don’t feel bad. He was still a third-class patent clerk the next year and the year after. He couldn’t get an academic job for three more years. That’s how long it took most of the physics community to comprehend what he was saying.

Einstein’s leap was not just a triumph of the imagination. It also came from questioning accepted wisdom and challenging authority. Every other physicist had read the beginning of Newton’s Principia, where the great man writes: “Absolute, true, and mathematical time, of itself and from its own nature, flows equably without relation to anything external.” Einstein had read that, too, but unlike the others he had asked, How do we know that to be true? How would we test that proposition?

So when we emphasize the need to teach our kids science and math, we should not neglect to encourage them to be imaginative, creative, have an intuitive feel for beauty, and to “think different,” as Steve Jobs would say. That’s one role of the humanities.

Einstein had one bad effect on the connection between the humanities and the sciences. His theory of relativity, combined with quantum theory that he also pioneered, made science seem intimidating and complex, beyond the comprehension of ordinary folks, even well-educated humanists.

For nearly three centuries, the mechanical universe of Newton, based on absolute certainties and laws, had formed the psychological foundation of the Enlightenment and the social order, with a belief in causes and effects, order, even duty. Newton’s mechanics and laws of motion were something everyone could understand. But Einstein conjured up a view of the universe in which space and time were dependent on frames of reference.

Einstein’s relativity was followed by Bohr’s indeterminacy, Heisenberg’s uncertainty, Gödel’s incompleteness, and a bestiary of other unsettling concepts that made science seem spooky. This contributed to what C.P. Snow, in a somewhat overrated essay with one interesting concept, called the split between the two cultures.

My thesis is that one thing that will help restore the link between the humanities and the sciences is the human-technology symbiosis that has emerged in the digital age.

That brings us to another historical figure, not nearly as famous, but perhaps she should be: Ada Byron, the Countess of Lovelace, often credited with being, in the 1840s, the first computer programmer.

The only legitimate child of the poet Lord Byron, Ada inherited her father’s romantic spirit, a trait that her mother tried to temper by having her tutored in math, as if it were an antidote to poetic imagination. When Ada, at age five, showed a preference for geography, Lady Byron ordered that the subject be replaced by additional arithmetic lessons, and her governess soon proudly reported, “she adds up sums of five or six rows of figures with accuracy.”

Despite these efforts, Ada developed some of her father’s propensities. She had an affair as a young teenager with one of her tutors, and when they were caught and the tutor banished, Ada tried to run away from home to be with him. She was a romantic as well as a rationalist.

The resulting combination produced in Ada a love for what she took to calling “poetical science,” which linked her rebellious imagination to an enchantment with numbers.

For many people, including her father, the rarefied sensibilities of the Romantic Era clashed with the technological excitement of the Industrial Revolution. Lord Byron was a Luddite. Seriously. In his maiden and only speech to the House of Lords, he defended the followers of Ned Ludd who were rampaging against mechanical weaving machines that were putting artisans out of work. But his daughter Ada loved how punch cards instructed those looms to weave beautiful patterns, and she envisioned how this wondrous combination of art and technology could someday be manifest in computers.

Ada’s great strength was her ability to appreciate the beauty of mathematics, something that eludes many people, including some who fancy themselves intellectual. She realized that math was a lovely language, one that describes the harmonies of the universe, and it could be poetic at times.

She became friends with Charles Babbage, a British gentleman-inventor who dreamed up a calculating machine called the Analytical Engine. To give it instructions, he adopted the punch cards that were being used by the looms.

Ada’s love of both poetry and math primed her to see “great beauty” in such a machine. She wrote a set of notes that showed how it could be programmed to do a variety of tasks. One example she chose was how to generate Bernoulli numbers. I’ve explained special relativity already, so I’m not going to take on the task of also explaining Bernoulli numbers except to say that they are an exceedingly complex infinite series that plays a role in number theory. Ada wrote charts for a step-by-step program, complete with subroutines, to generate such numbers, which is what earned her the title of first programmer.

In her notes Ada propounded two concepts of historic significance.

The first was that a programmable machine like the Analytical Engine could do more than just math. Such machines could process not only numbers but anything that could be notated in symbols, such as words or music or graphical displays. In short, she envisioned what we call a computer.

Her second significant concept was that no matter how versatile a machine became, it still would not be able to think. “The Analytical Engine has no pretensions whatever to originate anything,” she wrote. “It can do whatever we know how to order it to perform… but it has no power of anticipating any analytical relations or truths.” [xvi]

In other words, humans would supply the creativity.

This was in 1842. Flash forward one century.

Alan Turing was a brilliant and tragic English mathematician who helped build the computers that broke the German codes during World War II. He likewise came up with two concepts, both related to those of Lovelace.

The first was a formal description of a universal machine that could perform any logical operation.

Turing’s other concept addressed Lovelace’s contention that machines would never think. He called it “Lady Lovelace’s Objection.”[xvii] He asked, How would we know that? How could we test whether a machine could really think?

His answer got named the Turing Test. Put a machine and a person behind a curtain and feed them both questions, he suggested. If you cannot tell which is which, then it makes no sense to deny that the machine is thinking. This was in 1950, and he predicted that in the subsequent few decades machines would be built that would pass the Turing Test.

From Lovelace and Turing we can define two schools of thought about the relationship between humans and machines.

The Turing approach is that the ultimate goal of powerful computing is artificial intelligence: machines that can think on their own, that can learn and do everything that the human mind can do. Even everything a humanist can do.

The Lovelace approach is that machines will never truly think, and that humans will always provide the creativity and intentionality. The goal of this approach is a partnership between humans and machines, a symbiosis where each side does what it does best. Machines augment rather than replicate and replace human intelligence.

We humanists should root for the triumph of this human-machine partnership strategy, because it preserves the importance of the connection between the humanities and the sciences.

Let’s start, however, by looking at how the pursuit of pure artificial intelligence — machines that can think without us — has fared.

Ever since Mary Shelley conceived Frankenstein during a vacation with Ada’s father, Lord Byron, the prospect that a man-made contraption might have its own thoughts and intentions has been frightening. The Frankenstein motif became a staple of science fiction. A vivid example was Stanley Kubrick’s 1968 movie, “2001: A Space Odyssey,” featuring the frighteningly intelligent and intentional computer, Hal.

Artificial intelligence enthusiasts have long been promising, or threatening, that machines like Hal with minds of their own would soon emerge and prove Ada wrong. Such was the premise at the 1956 conference at Dartmouth, organized by John McCarthy and Marvin Minsky, where the field of “artificial intelligence” was launched. The conferees concluded that a breakthrough was about twenty years away. It wasn’t. Decade after decade, new waves of experts have claimed that artificial intelligence was on the visible horizon, perhaps only twenty years away. Yet true artificial intelligence has remained a mirage, always about twenty years away.

John von Neumann, the breathtakingly brilliant Hungarian-born humanist and scientist who helped devise the architecture of modern digital computers, began working on the challenge of artificial intelligence shortly before he died in 1957. He realized that the architecture of computers was fundamentally different from that of the human brain. Computers were digital and binary — they dealt in absolutely precise units — whereas the brain is partly an analog system, which deals with a continuum of possibilities. In other words, a human’s mental process includes many signal pulses and analog waves from different nerves that flow together to produce not just binary yes-no data but also answers such as “maybe” and “probably” and infinite other nuances, including occasional bafflement. Von Neumann suggested that the future of intelligent computing might require abandoning the purely digital approach and creating “mixed procedures” that include a combination of digital and analog methods.[xviii]

In 1958, Cornell professor Frank Rosenblatt published a mathematical approach for creating an artificial neural network like that of the human brain, which he called a “Perceptron.” Using weighted statistical inputs, it could, in theory, process visual data. When the Navy, which was funding the work, unveiled the system, it drew the type of press hype that has accompanied many subsequent artificial intelligence claims. “The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence,” the New York Times wrote. The New Yorker was equally enthusiastic. “The Perceptron, …as its name implies, is capable of what amounts to original thought,” it reported.[xix]

That was almost sixty years ago. The Perceptron still does not exist. However, almost every year since then there have been breathless reports about some “about-to-be marvel” that would surpass the human brain, many of them using almost the exact same phrases as the 1958 stories about the Perceptron.

Discussion about artificial intelligence flared up a bit after IBM’s Deep Blue, a chess-playing machine, beat world champion Garry Kasparov in 1997 and then Watson, its natural-language question-answering cousin, won at Jeopardy! against champions Brad Rutter and Ken Jennings in 2011. But these were not true breakthroughs of artificial intelligence. Deep Blue won its chess match by brute force; it could evaluate 200 million positions per second and match them against 700,000 past grandmaster games. Deep Blue’s calculations were fundamentally different, most of us would agree, from what we mean by “real” thinking. “Deep Blue was only intelligent the way your programmable alarm clock is intelligent,” Kasparov said. “Not that losing to a $10 million alarm clock made me feel any better.”[xx]

Likewise, Watson won at Jeopardy! by using megadoses of computing power: It had 200 million pages of information in its four terabytes of storage, of which the entire Wikipedia accounted for merely 0.2 percent. It could search the equivalent of a million books per second. It was also rather good at processing colloquial English. Still, no one who watched would bet on it passing the Turing Test. For example, one question was about the “anatomical oddity” of former Olympic gymnast George Eyser. Watson answered, “What is a leg?” The correct answer was that Eyser was missing a leg. The problem was understanding an “oddity,” David Ferrucci, who ran the Watson project at IBM, explained. “The computer wouldn’t know that a missing leg is odder than anything else.”[xxi]

Here’s the paradox: Computers can do some of the toughest tasks in the world (assessing billions of possible chess positions, finding correlations in hundreds of Wikipedia-sized information repositories), but they cannot perform some of the tasks that seem most simple to us mere humans. Ask Google a hard question like, “What is the depth of the Red Sea?” and it will instantly respond 7,254 feet, something even your smartest friends don’t know. Ask it an easy one like, “Can an earthworm play basketball?” and it will have no clue, even though a toddler could tell you, after a bit of giggling.[xxii]

At Applied Minds near Los Angeles, you can get an exciting look at how a robot is being programmed to maneuver, but it soon becomes apparent that it still has trouble navigating across an unfamiliar room, picking up a crayon, or writing its name. A visit to Nuance Communications near Boston shows the wondrous advances in speech recognition technologies that underpin Siri and other systems, but it’s also apparent to anyone using Siri that you still can’t have a truly meaningful conversation with a computer, except in a fantasy movie. A visit to the New York City police command system in Manhattan reveals how computers scan thousands of feeds from surveillance cameras as part of a “Domain Awareness System,” but the system still cannot reliably identify your mother’s face in a crowd.

There is one thing that all of these tasks have in common: even a four-year-old child can do them.

Perhaps in a few more decades there will be machines that think like, or appear to think like, humans. “We are continually looking at the list of things machines cannot do — play chess, drive a car, translate language — and then checking them off the list when machines become capable of these things,” said Tim Berners-Lee, who invented the World Wide Web. “Someday we will get to the end of the list.”[xxiii]

Someday we may even reach the “singularity,” a term that John von Neumann coined and the science fiction writer Vernor Vinge popularized, which is sometimes used to describe the moment when computers are not only smarter than humans but can also design themselves to be even supersmarter, and will thus no longer need us mere mortals. Vinge says this will occur by 2030.[xxiv]

On the other hand, this type of artificial intelligence may take a few more generations or even centuries. We can leave that debate to the futurists. Indeed, depending on your definition of consciousness, it may never happen. We can leave that debate to the philosophers and theologians.

There is, however, another possibility: that the partnership between humans and technology will always be more powerful than purely artificial intelligence. Call it the Ada Lovelace approach. Machines would not replace humans, she felt, but instead become their collaborators. What humans — and humanists — would bring to this relationship, she said, was originality and creativity.

The past fifty years have shown that this strategy of combining computer and human capabilities has been far more fruitful than the pursuit of machines that could think on their own.

J.C.R. Licklider, an MIT psychologist who became the foremost father of the Internet, up there with Al Gore, helped chart this course back in 1960. His ideas built on his work designing America’s air defense system, which required an intimate collaboration between humans and machines.

Licklider set forth a vision, in a paper titled “Man-Computer Symbiosis,” that has been pursued to this day: “Human brains and computing machines will be coupled together very tightly, and the resulting partnership will think as no human brain has ever thought and process data in a way not approached by the information-handling machines we know today.”[xxv]

Licklider’s approach was given a friendly face by a computer systems pioneer named Doug Engelbart, who in 1968 demonstrated a networked computer with an interface involving a graphical display and a mouse. In a manifesto titled “Augmenting Human Intellect,” he echoed Licklider. The goal, Engelbart wrote, should be to create “an integrated domain where hunches, cut-and-try, intangibles, and the human ‘feel for a situation’ usefully coexist with… high-powered electronic aids.”[xxvi]

Richard Brautigan, a poet based at Caltech for a while, expressed that dream a bit more lyrically in his poem “Machines of Loving Grace.” It extolled “a cybernetic meadow / where mammals and computers / live together in mutually / programming harmony.”[xxvii]

The teams that built Deep Blue and Watson later adopted this symbiosis approach, rather than pursuing the objective of the artificial intelligence purists. “The goal is not to replicate human brains,” said John E. Kelly, IBM’s Research director. “This isn’t about replacing human thinking with machine thinking. Rather, in the era of cognitive systems, humans and machines will collaborate to produce better results, each bringing their own superior skills to the partnership.”[xxviii]

An example of the power of this human-machine symbiosis arose from a realization that struck Kasparov after he was beaten by Deep Blue. Even in a rule-defined game such as chess, he came to believe, “what computers are good at is where humans are weak, and vice versa.” That gave him an idea for an experiment. “What if instead of human versus machine we played as partners?”

This type of tournament was held in 2005. Players could work in teams with computers of their choice. There was a substantial prize, so many grandmasters and advanced computers joined the fray. But neither the best grandmaster nor the most powerful computer won. Symbiosis did. The final winner was neither a grandmaster nor a state-of-the-art computer, but two American amateurs who used three computers at the same time and knew how to manage the process of collaborating with their machines. “Their skill at manipulating and ‘coaching’ their computers to look very deeply into positions effectively counteracted the superior chess understanding of their grandmaster opponents and the greater computational power of other participants,” according to Kasparov.[xxix] In other words, the future might belong to those who best know how to partner and collaborate with computers.

In a similar way, IBM decided that the best use of Watson, the Jeopardy!-playing computer, would be for it to collaborate with humans, rather than try to top them. One project involved reconfiguring the machine to work in partnership with doctors on cancer diagnoses and treatment plans. The Watson system was fed more than two million pages from medical journals and 600,000 pieces of clinical evidence, and it could search up to 1.5 million patient records. When a doctor put in a patient’s symptoms and vital information, the computer provided a list of recommendations ranked in order of its level of confidence.[xxx]

In order to be useful, the IBM team realized, the machine needed to interact with human doctors in a humane way — a manner that made collaboration pleasant. David McQueen, the Vice President of Software at IBM Research, described programming a pretense of humility into the machine. “We reprogrammed our system to come across as humble and say, ‘here’s the percentage likelihood that this is useful to you, and here you can look for yourself.’” Doctors were delighted, saying that it felt like a conversation with a knowledgeable colleague. “We aim to combine human talents, such as our intuition, with the strengths of a machine, such as its infinite breadth,” said McQueen. “That combination is magic, because each offers a piece that the other one doesn’t have.”[xxxi]

This belief that machines and humans will get smarter together, playing to each other’s strengths and shoring up each other’s weaknesses, raises an interesting prospect: perhaps no matter how fast computers progress, artificial intelligence may never outstrip the intelligence of the human-machine partnership.

Let us assume, for example, that a machine someday exhibits all of the mental capabilities of a human: it appears to feel and perceive emotions, appreciate beauty, create art, and have its own desires. Such a machine might be able to pass a Turing Test. It might even pass what we could call the Ada Test, which is that it could appear to “originate” its own thoughts that go beyond what we humans program it to do.

There would, however, still be another hurdle before we could say that artificial intelligence has triumphed over human-technology partnership. We can call it the Licklider Test. It would go beyond asking whether a machine could replicate all the components of human intelligence. Instead, it would ask whether the machine accomplishes these tasks better when whirring away completely on its own, or whether it does them better when working in conjunction with humans. In other words, is it possible that humans and machines working in partnership will indefinitely be more powerful than an artificial intelligence machine working alone?

If so, then “man-machine symbiosis,” as Licklider called it, will remain triumphant. Artificial intelligence need not be the holy grail of computing. The goal instead could be to find ways to optimize the collaboration between human and machine capabilities — to let the machines do what they do best and have them let us do what we do best.

If this human-machine symbiosis turns out to be the wave of the future, then it will make more important those who can stand at the intersection of humanities and sciences. That interface will be the critical juncture. The future will belong to those who can appreciate both human emotions and technology’s capabilities.

This will require more than a feel for only science, technology, engineering, and math. It will also depend on those who understand aesthetics, human emotions, the arts, and the humanities.

Let’s look at two of the most brilliant contemporary innovators who understood the intersection of humans and technology: Alan Kay of Xerox PARC and Steve Jobs of Apple.

Alan Kay’s father was a physiology professor and his mother was a musician. “Since my father was a scientist and my mother was an artist, the atmosphere during my early years was full of many kinds of ideas and ways to express them,” he recalled. “I did not distinguish between ‘art’ and ‘science’ and still don’t.” He went to graduate school at the University of Utah, which then had one of the best computer graphics programs in the world. He became a fan of Doug Engelbart’s work and came up with the idea for a Dynabook, a simple and portable computer, “for children of all ages,” with a graphical interface featuring icons that you could point to and click. In other words, something resembling a MacBook Air or an iPad, thirty years ahead of its time. He went to work at Xerox PARC, where a lot of these concepts were developed.

Steve Jobs was blown away by these ideas when he saw them on visits to Xerox PARC, and he was the one who turned them into a reality with his team at Apple. As noted earlier, Jobs’s core belief was that the creativity of the new age of technology would come from those who stood at the intersection of the humanities and the sciences. He went to a very creative liberal arts college, Reed, and even after dropping out hung around to take courses like calligraphy and dance. He combined his love of beautiful lettering with his appreciation for the bit-mapped screen displays engineered at Xerox PARC, which allowed each and every pixel on the screen to be controlled by the computer. This led to the delightful array of fonts and displays he built into the first Macintosh and which we now can enjoy on every computer.

More broadly, Jobs was a genius in understanding how people would relate to their screens and devices. He understood the emotion, beauty, and simplicity that make for a great human-machine interface. And he ingrained that passion and intuition into Apple, which under Tim Cook and Jony Ive continues to startle us with designs that are profound in their simplicity.

Alan Kay and Steve Jobs are refutations of an editorial that appeared a few months ago in the Harvard Crimson, titled “Let Them Eat Code,” which poked fun at humanities-lovers who decried the emphasis on engineering and science education. The Crimson wrote:

We’re not especially sorry to see the English majors go. Increased mechanization and digitization necessitates an increased number of engineers and programmers. Humanities apologists should be able to appreciate this. It’s true that fewer humanities majors will mean fewer credentialed literary theorists and hermeneutic circles. But the complement—an increased number of students pursuing degrees in science, technology, engineering, and math—will mean a greater probability of breakthroughs in research. We refuse to rue a development that has advances in things like medicine, technological efficiency, and environmental sustainability as its natural consequence. To those who are upset with the trend, we say: Let them eat code.[xxxii]

Let me remind the Crimson editors that Bill Gates, who focused relentlessly on applied math and engineering when he was at Harvard, produced a music player called the Zune. Steve Jobs, who studied dance and calligraphy and literature at Reed, produced the iPod.

I hasten to add that I deeply admire Bill Gates as a brilliant software engineer, business pioneer, philanthropist, moral person, and (yes) humanist in the best sense. But there may be just a tiny bit of truth to Steve Jobs’s assertion about Gates: “He’d be a broader guy if he had dropped acid once or gone off to an ashram when he was younger.” At the very least, his engineering skills may have benefited a bit if he had taken a few more humanities courses at Harvard.

That leads to a final lesson, one that takes us back to Ada Lovelace. In our symbiosis with machines, we humans have brought one crucial element to the partnership: creativity. “The machines will be more rational and analytic,” IBM’s research director Kelly has said. “People will provide judgment, intuition, empathy, a moral compass, and human creativity.”

We humans can remain relevant in an era of cognitive computing because we are able to “Think Different,” something that an algorithm, almost by definition, can’t master. We possess an imagination that, as Ada said, “brings together … ideas and conceptions in new, original, endless, ever-varying combinations.” We discern patterns and appreciate their beauty. We weave information into narratives. We are storytelling animals. We have a moral sense.

Human creativity involves values, aesthetic judgments, social emotions, personal consciousness, and yes, a moral sense. These are what the arts and humanities teach us – and why those realms are as valuable to our education as science, technology, engineering, and math. If we humans are to uphold our end of the man-machine symbiosis, if we are to retain our role as partners with our machines, we must continue to nurture the humanities, the wellsprings of our creativity. That is what we bring to the party.

“I have come to regard a commitment to the humanities as nothing less than an act of intellectual defiance, of cultural dissidence,” the New Republic literary editor Leon Wieseltier told students at Brandeis a year ago. “You had the effrontery to choose interpretation over calculation, and to recognize that calculation cannot provide an accurate picture, or a profound picture, or a whole picture, of self-interpreting beings such as ourselves. There is no greater bulwark against the twittering acceleration of American consciousness than the encounter with a work of art, and the experience of a text or an image.”[xxxiii]

But enough singing to the choir. No more nodding amen. Allow me to deviate from storytelling, for just a moment, to preach the fourth part of the traditional five-part Puritan sermon, the passages that provide a bit of discomfort, perhaps even some fire and brimstone about us sinners in the hands of an angry God.

The counterpart to my paean to the humanities is also true. People who love the arts and humanities should endeavor to appreciate the beauties of math and physics, just as Ada Lovelace did. Otherwise, they will be left as bystanders at the intersection of arts and science where most digital-age creativity will occur. They will surrender control of that territory to the engineers.

Many people who extol the arts and the humanities, who applaud vigorously the paeans to their importance in our schools, will proclaim without shame (and sometimes even joke) that they don’t understand math or physics. They would consider people who don’t know Hamlet from Macbeth to be uncultured, yet they might merrily admit that they don’t know the difference between a gene and a chromosome, or a transistor and a diode, or an integral and a differential equation. These things may seem hard. Yes, but so, too, is Hamlet. And like Hamlet, each of these concepts is beautiful. Like an elegant mathematical equation, they are brushstrokes of the glories of the universe.

Trust me, our patron Thomas Jefferson, and his mentor Benjamin Franklin, would regard as a Philistine anyone who felt smug about not understanding math or complacent about not appreciating science.

Those who thrived in the technology revolution were people in the tradition of Ada Lovelace, who saw the beauty of both the arts and the sciences. Combining the poetical imagination of her father Byron with the mathematical imagination of her mentor Babbage, she became a patroness saint of our digital age.

The next phase of the digital revolution will bring a true fusion of technology with the creative industries, such as media, fashion, music, entertainment, education, and the arts. Until now, much of the innovation has involved pouring old wine – books, newspapers, opinion pieces, journals, songs, television shows, movies – into new digital bottles. But the interplay between technology and the creative arts will eventually result in completely new formats of media and forms of expression. Innovation will come from being able to link beauty to technology, human emotions to networks, and poetry to processors.

The people who will thrive in this future will be those who, as Steve Jobs put it, “get excited by both the humanities and technology.” In other words, they will be the spiritual heirs of Ada Lovelace, people who can connect the arts to the sciences and have a rebellious sense of wonder that opens them to the beauty of both.”

[i] Walker Percy interview, The Paris Review, Summer 1987. This draws from a piece I wrote on Percy in American Sketches (Simon and Schuster, 2009).

[ii] Walker Percy, “The Fateful Rift: The San Andreas Fault in the Modern Mind,” Jefferson Lecture, May 3, 1989.

[iii] Author’s interview with Steve Jobs.

[iv] Benjamin Franklin to Peter Collinson, Apr. 29, 1749 and Feb. 4, 1750.

[v] Benjamin Franklin to John Lining, March 18, 1755.

[vi] Thomas Jefferson to Benjamin Franklin, June 21, 1776. This draws from my Benjamin Franklin: An American Life (Simon and Schuster, 2003).

[vii] See Pauline Maier, American Scripture (New York: Knopf, 1997); Garry Wills, Inventing America (Garden City: Doubleday, 1978); and Carl Becker, The Declaration of Independence (New York: Random House, 1922).

[viii] Albert Einstein to Sybille Blinoff, May 21, 1954. This draws from my Einstein: His Life and Universe (Simon and Schuster, 2007).

[ix] Peter Bucky, The Private Albert Einstein (Andrews McMeel, 1993), 156.

[x] Albert Einstein to Hans Albert Einstein, Jan. 8, 1917.

[xi] Hans Albert Einstein interview in Gerald Whitrow, Einstein: The Man and His Achievement (London: BBC, 1967), 21.

[xii] Bucky, 148.

[xiii] George Sylvester Viereck, Glimpses of the Great (New York: Macaulay, 1930), 377. (First published as “What Life Means to Einstein,” Saturday Evening Post, October 26, 1929.)

[xiv] Einstein to Phyllis Wright, Jan. 24, 1936.

[xv] Albert Einstein, “Autobiographical Notes,” in Paul Arthur Schilpp, ed. Albert Einstein: Philosopher-Scientist (La Salle, Ill.: Open Court Press, 1949), 53.

[xvi] Ada, Countess of Lovelace, “Notes on Sketch of The Analytical Engine,” October, 1842.

[xvii] Alan Turing, “Computing Machinery and Intelligence,” Mind, October 1950.

[xviii] John von Neumann, The Computer and the Brain (Yale, 1958), 80.

[xix] Gary Marcus, “Hyping Artificial Intelligence, Yet Again,” New Yorker, Jan. 1, 2014, citing: “New Navy Device Learns by Doing”, (UPI wire story) New York Times, July 8, 1958; “Rival”, The New Yorker, Dec. 6, 1958.

[xx] Garry Kasparov, “The Chess Master and the Computer,” The New York Review of Books, Feb. 11, 2010; Clive Thompson, Smarter Than You Think (Penguin, 2013), 3.

[xxi] “Watson on Jeopardy,” IBM’s “Smarter Planet” website, Feb. 14, 2011.

[xxii] Gary Marcus, “Why Can’t My Computer Understand Me,” New Yorker, Aug. 16, 2013, coined the example I adapted.

[xxiii] Author’s interview with Tim Berners-Lee.

[xxiv] Vernor Vinge, “The Coming Technological Singularity,” Whole Earth Review, Winter 1993.

[xxv] J.C.R. Licklider, “Man-Computer Symbiosis,” IRE Transactions on Human Factors in Electronics, March 1960.

[xxvi] Douglas Engelbart, “Augmenting Human Intellect,” Prepared for the Director of Information Sciences, Air Force Office of Scientific Research, October 1962.

[xxvii] First published in limited distribution by The Communication Company, San Francisco, 1967.

[xxviii] Kelly and Hamm, 7.

[xxix] Kasparov, “The Chess Master and the Computer.”

[xxx] “Why Cognitive Systems?” IBM Research website, http://www.research.ibm.com/cognitive-computing/why-cognitive-systems.shtml.

[xxxi] Author’s interview.

[xxxii] “Let Them Eat Code,” the Harvard Crimson, Nov. 8, 2013.

[xxxiii] Leon Wieseltier, “Perhaps Culture is Now the Counterculture: A Defense of the Humanities,” The New Republic, May 28, 2013.