What might artificial intelligence mean for human autonomy, and what role might governments play in the future of AI? This August, I spent several days as one of two guest scholars discussing this question at the Aspen Institute Roundtable on Artificial Intelligence, convened by the Aspen Institute Communications and Society Program.

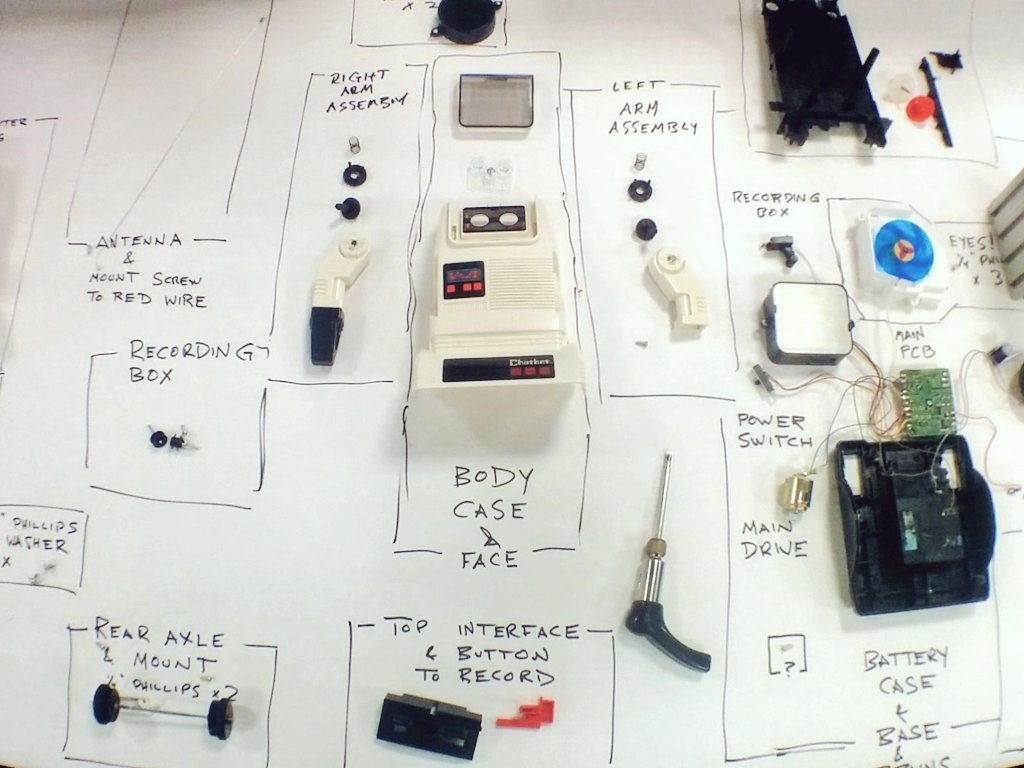

Transparent AI is a start, but as the friend who disassembled my robot discovered, looking inside can’t explain the social impact.

I first heard about the Aspen Institute in a class on technology and values almost fifteen years ago. The class was taught by the late Thomas Winpenny, a historian nearing the end of a long university career who trained at the Hagley program on Capitalism, Technology, and Culture. An Irish-American who grew up in working-class Philadelphia, Tom invited us to see the human impact of technological change and to ask enduring questions about power, values, and human flourishing. Throughout the class, Tom also posed emerging questions in technology ethics, requiring us to apply approaches and perspectives from the history of technology to these new questions. We also discussed Mortimer Adler’s Six Great Ideas, and the strengths and weaknesses of attempting to bring the liberal arts into conversation with power for the common good.

At the time, I was studying literature and computer science. Winpenny’s class helped me see how my two passions could contribute to public understanding and public participation in the technology transformations that shape society. The Aspen Institute Guest Scholar Program allowed me to take this into the field. At the AI roundtable I was able to meet people working in parallel spaces, share my own research findings, think critically about the direction of my work, and find new avenues for impact.

How is it even possible for a group of people to make any kind of progress on an issue as broad as human autonomy in an age of intelligent machines? As a new postdoc at Princeton who has just defended my MIT PhD on governing human and machine behavior in an experimenting society, I have personally wrestled with these complexities. In one recent workshop I attended, policy experts struggled to grasp the nature of machine learning and its impact on their fields, and the conversation ended up much like any other policy discussion. In other meetings, I have watched computer scientists, mathematicians, and corporate leaders wrestle with the nuances of human ethics, issues they don’t always have the language, the experience, or the empirical perspective to describe. These things are hard.

Upon arrival in Aspen, I knew this gathering would be special. We were given a briefing packet with stories by Ursula K. Le Guin paired with essays by Isaiah Berlin, John Stuart Mill, and the moral philosopher Marina Oshana. The attendees included people who are already shaping the role of artificial intelligence in society: corporate leaders, anthropologists, journalists, government representatives, and representatives of foundations and standards organizations.

My work adds another perspective to these conversations: the voices of communities directly affected by AI in their lives. Machine learning systems are usually deployed into communities without a systematic look at the social impacts or any conversation about what those communities might need. I argue that creators of AI systems have an obligation to experiment, and that the evaluation of AI should be done with the leadership and consent of the people affected. In the long term, I believe society needs public-interest organizations like UL (Underwriters Laboratories) to test tech products’ social impacts. That’s why I started CivilServant, a nonprofit that organizes citizen behavioral scientists to test the social impact of human and machine governance online. We also conduct independent, public-interest evaluations of social technologies, including AIs, looking beyond the inner workings of a system to understand the societal outcomes that occur when algorithms interact in complex ways with human behavior.

The AI roundtable offered a fascinating glimpse into the differing positions held by the people and institutions who attended; I’m sure that each of us came away from the wide-ranging gathering with something unique. Here are a few new observations of my own:

- Big Ideas: AI is being moved forward by companies, governments and scientists who tend to think at massive scales and who expect/predict/hope that their AI systems will inevitably define our collective future. Even if algorithms are made more transparent, they will still be making decisions across huge proportions of humanity. Ensuring the consent of the networked is a massive undertaking that is moving forward more slowly than the development of AI systems themselves.

- Strategic Thinking: As I develop a network of citizen behavioral scientists working for a fairer, safer, more understanding internet, I tend to look for successful models and inspiration from the history of citizen science. One participant challenged me: what can I learn from the failings and mistakes of these movements?

- Opportunities: Until I visited, I hadn’t realized how much Aspen is a nexus for people, ideas, and opportunities, even beyond the Institute itself. I came away from the event with a full list of follow-ups that may become fruitful opportunities for my academic research and nonprofit.

- Serendipity: While at the Institute, I unexpectedly got to speak with Walter Isaacson, whose recent article on internet anonymity was part of the inspiration for my own literature review on the social effects of requiring real names online.

The Communications and Society Program at the Aspen Institute is an active venue for framing policies and developing recommendations in the information and communications fields. At the AI Roundtable, we did just this. The Guest Scholar program made my participation possible, and for that, I’m ever grateful. Thank you, Aspen!

Dr. J. Nathan Matias is the 2017 Aspen Institute Roundtable on Artificial Intelligence Guest Scholar. The Communications and Society Program sponsors the guest scholarship initiative to give students of color the opportunity to foster their professional and academic careers in the field of media and technology policy. Dr. Matias is a graduate of the Massachusetts Institute of Technology Media Lab. Currently, he is a Postdoctoral Researcher at Princeton University in Psychology, Sociology, and the Center for Information Technology Policy.

The opinions expressed in this piece are those of the author and do not necessarily represent the views of the Aspen Institute.